Artificial intelligence (AI) and large language models (LLMs) are going to shake up many industries. In a domain where the written word is the product, what could be more fitting than a technology that can generate and manipulate text at scale? There is a mixture of nervousness and excitement about the possibilities and threats they pose.

At Arq Works, we have been working with LLMs since the watershed moment last November when OpenAI launched ChatGPT. The ability of LLMs to answer queries and generate human-like text seemed almost magical. However, after working with them over the last several months, it is clear that the current generation has limitations. While the potential of LLMs is vast, applying them in the real world brings significant challenges, particularly when integrating them into existing workflows and processes.

At the end of last year, our roadmap for the coming 12 months was thrown away and replaced with a whole host of AI-backed features. Some of these came out of our own planning and some came from customer requests. Most have been successfully implemented, but in many cases realizing the initial promise took plenty of fine-tuning, and some have been dropped (for the moment…). Below are some of the technical limitations we have encountered.

Lack of Intuitive Reasoning

LLMs don’t “understand” context or content the way humans do. Their responses are based on patterns in data, not genuine comprehension. This limitation becomes clear when they’re asked questions that require intuition or common-sense reasoning.

Scenario: We asked LLMs to extract references to legal acts from sections of text, expecting this to be a task that large language models would be good at. The LLM was given the source text and several examples of act references and their structure.

Observations: given the following text…

“The demand for organic agricultural products has surged in recent years, reflecting a growing consumer preference for natural and sustainable food sources.”

…the LLM would report that the text mentions the “Agricultural Product Standards Act 28 of 1999”. Not only had it identified an act that was not directly mentioned, it had also invented a reference to an act that does not exist. When asked for its reasoning, the LLM would say that the text could potentially apply to this act. In this case, because we are not able to provide the system with a complete list of acts, we reverted to a traditional text-matching approach.
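
Our exact text-matching rules are not described here, so the following is only a minimal sketch of the idea. It assumes act citations follow the “<Title> Act <number> of <year>” pattern seen in the example above; the regular expression and the function name `extract_act_references` are hypothetical. Because it only returns citations that appear verbatim in the text, it cannot invent an act the way the LLM did.

```python
import re
from typing import List, Tuple

# Hypothetical pattern, assuming citations look like "<Title> Act <number> of <year>",
# e.g. "Companies Act 71 of 2008". Title words are capitalized; a few lowercase
# connectors ("of", "and", "the", "for", "on") are allowed inside a title.
ACT_PATTERN = re.compile(
    r"\b([A-Z][\w'-]*(?:\s+(?:of|and|the|for|on|[A-Z][\w'-]*))*\s+Act)"
    r"\s+(?:No\.\s*)?(\d+)\s+of\s+(\d{4})\b"
)


def extract_act_references(text: str) -> List[Tuple[str, int, int]]:
    """Return (title, number, year) for every explicit Act citation in the text."""
    return [
        (m.group(1), int(m.group(2)), int(m.group(3)))
        for m in ACT_PATTERN.finditer(text)
    ]


print(extract_act_references("Directors have duties under the Companies Act 71 of 2008."))
# → [('Companies Act', 71, 2008)]
```

A pattern like this misses informal references such as “the Act” and can pull capitalized words that precede a title into the match, so it trades recall for never reporting an act that is not actually in the text.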

Inconsistency in Outputs

One of the primary hurdles in working with LLMs is their lack of predictable consistency. Given the same prompt at different times, or with different content, the model may produce varying responses. While this variety can be a strength, it becomes a liability when consistency and standardization are needed, particularly when the output of the LLM has to feed into an existing workflow.

Scenario: We tasked the model with a simple binary classification, asking, “Would the subject ‘Business and Finance’ apply to this text?” The expectation was a straightforward “Yes” or “No.”

Observations: While at times the model would provide a concise “Yes” or “No” as expected, there were instances where it would add qualifiers. For example, it might respond with statements like, “It does not conform to that subject for reason X.” Such variations introduce an element of unpredictability, especially when building systems that rely on standardized responses.
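
One defensive step is to accept only a clean “Yes” or “No” and treat anything else as unparseable rather than guessing what a qualified answer meant. A minimal sketch, where the function name `parse_yes_no` is hypothetical:

```python
from typing import Optional


def parse_yes_no(reply: str) -> Optional[bool]:
    """Map a model reply to True/False, or None if it is not a clean Yes/No.

    parse_yes_no is a hypothetical helper; None means "retry or send to review".
    """
    # Ignore surrounding whitespace, quotes and trailing punctuation, and case.
    cleaned = reply.strip().strip("\"'").rstrip(".!").strip().lower()
    if cleaned == "yes":
        return True
    if cleaned == "no":
        return False
    return None


print(parse_yes_no("It does not conform to that subject for reason X."))
# → None
```

Returning `None` instead of inferring “No” from the qualifier keeps the downstream workflow strict: unparseable replies can be retried or routed to a human.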

OpenAI has recently improved this situation by allowing you to specify a content model (a function definition) for the returned data. Whilst this is a massive help, the output is still not 100% predictable.
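
With OpenAI's function calling, the function's arguments are described with JSON Schema and the model returns them as a JSON string, which still needs checking before it is trusted. The sketch below shows a schema for the Yes/No classification and a validator for the returned string; the function name `classify_subject`, the `applies` field and `parse_function_arguments` are hypothetical, and no API call is made.

```python
import json
from typing import Optional

# Hypothetical function definition in the JSON Schema style used for function calling.
CLASSIFY_SUBJECT_FUNCTION = {
    "name": "classify_subject",
    "description": "Record whether a subject applies to the supplied text.",
    "parameters": {
        "type": "object",
        "properties": {
            "applies": {"type": "string", "enum": ["Yes", "No"]},
        },
        "required": ["applies"],
    },
}

ALLOWED = CLASSIFY_SUBJECT_FUNCTION["parameters"]["properties"]["applies"]["enum"]


def parse_function_arguments(arguments: str) -> Optional[bool]:
    """Validate the JSON arguments string returned by the model; None means retry or review."""
    try:
        data = json.loads(arguments)
    except json.JSONDecodeError:
        return None  # the model produced malformed JSON
    if not isinstance(data, dict):
        return None  # valid JSON, but not the object the schema describes
    value = data.get("applies")
    if value not in ALLOWED:
        return None  # missing field, or a value outside the enum
    return value == "Yes"
```

The schema narrows what the model is likely to return, while the validator covers the cases where it still does not comply.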

The Potential Misuse of LLMs in Generating Misleading Content

If the output of our LLM integrations is going to be presented to end users, then we have to make it clear, both in our systems and to our publishers, that it must be flagged as AI-generated. At present, where we generate content, it is a starting point that will be edited or rewritten by a human, but we are now starting to roll out features where users interact with the AI directly. In all of our features we provide source documents for the AI to work from, which reduces the likelihood of the AI making up information (known as hallucinating). However, that text may not contain all the context needed to answer a particular question, so the answer may not be correct in every case. Even with a massive disclaimer above the output, it is likely that someone will copy and paste the answer verbatim. There are examples of this from many of the systems that are already live.
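
As an illustration of grounding the model in source documents and labelling what it produces, here is a minimal sketch that builds chat-style messages (a list of `role`/`content` dictionaries) with numbered sources and an instruction to answer only from them. The instruction wording, `AI_LABEL`, `build_grounded_messages` and `label_ai_output` are all hypothetical, and this reduces rather than eliminates hallucination.

```python
from typing import Dict, List

# Hypothetical label text shown with every AI answer.
AI_LABEL = "[AI-generated: please verify against the source documents]"


def build_grounded_messages(question: str, sources: List[str]) -> List[Dict[str, str]]:
    """Build chat messages asking the model to answer only from the supplied sources."""
    numbered = "\n\n".join(
        f"[Source {i}]\n{text}" for i, text in enumerate(sources, start=1)
    )
    system = (
        "Answer using only the sources provided. "
        "If the sources do not contain the answer, say that you do not know. "
        "Cite the source numbers you relied on."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"{numbered}\n\nQuestion: {question}"},
    ]


def label_ai_output(answer: str) -> str:
    """Prefix model output so it is always presented as AI-generated."""
    return f"{AI_LABEL}\n{answer}"
```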

It is also possible to jailbreak AI systems, that is, to get the AI to ignore its original instructions and output whatever a malicious user wants. The result is potential reputational damage, as screenshots circulate of your brand and shiny new AI system going way off message. You can add layers of protection to prevent this, but there are examples online of these protections being circumvented in most of the live systems.
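
To make “layers” concrete, here is a deliberately naive sketch of one: screening user input for a few obvious override phrases and wrapping what remains in delimiters so the system prompt can refer to it as untrusted data. The phrase list, the `<user_input>` tags and `wrap_user_input` are hypothetical, and as noted above, determined users routinely get around checks like these.

```python
import re
from typing import Optional

# Hypothetical, intentionally simple phrase list; attackers can easily rephrase around it.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"\byou are now\b", re.IGNORECASE),
    re.compile(r"system prompt", re.IGNORECASE),
]


def wrap_user_input(user_text: str) -> Optional[str]:
    """Return the user text wrapped in delimiters, or None if it trips a screening rule."""
    if any(p.search(user_text) for p in SUSPICIOUS_PATTERNS):
        return None  # route to a refusal or human review instead of the model
    # Strip any delimiter tags the user typed so they cannot close the block early.
    cleaned = user_text.replace("<user_input>", "").replace("</user_input>", "")
    return f"<user_input>\n{cleaned}\n</user_input>"
```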

The Context Window Challenge: LLMs and Lengthy Texts

Another current limitation of LLMs is their fixed context window size. This “window”, measured in tokens (fragments of words), determines how much text the model can consider at any given moment. Depending on the model you are calling, it ranges from a couple of thousand words up to tens of thousands.
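
Before sending a document it helps to check whether it is likely to fit. The sketch below uses OpenAI's rough rule of thumb of about four characters of English per token and reserves room for the reply; the function names and the default reserve of 500 tokens are assumptions. For exact counts, use the model's own tokenizer (for OpenAI models, the `tiktoken` library).

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate assuming ~4 characters per token (English text)."""
    return (len(text) + 3) // 4  # ceiling division


def fits_context(text: str, context_tokens: int, reserve_for_output: int = 500) -> bool:
    """True if the text plus room for the reply is likely to fit in the window.

    The 500-token default reserve is an assumed figure, not a provider setting.
    """
    return estimate_tokens(text) + reserve_for_output <= context_tokens
```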

When working with expansive texts, such as novels or in-depth research papers, this limitation becomes particularly apparent. For instance, if an LLM is provided with a snippet from the middle of a novel and asked for an analysis, it lacks a broader understanding of events or character developments from earlier chapters. Consequently, its insights may be shallow or disjointed, missing nuanced references or overarching themes.

This context limitation poses challenges for tasks that require a complete grasp of large documents. While readers can remember and reference events from earlier parts of a book when they’re many chapters deep, an LLM is constrained by its window, making it blinkered to the broader narrative or the full scope of the content.

At Arq Works, we create a summary of the document that fits within the context window of the model we are calling. With longer documents, this step can lose information. As new models are released, context windows are getting bigger, so this is an issue that should eventually be resolved.
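
Our summarization pipeline is not described in detail here; one common way to shrink a long document to fit is a “map-reduce” style approach: summarize each chunk, join the partial summaries, and repeat until the result fits a single call. A minimal sketch, where `summarize` is a stand-in for whatever model call you use and the chunk size is measured in words as a simplifying assumption:

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long_document(
    text: str,
    summarize: Callable[[str], str],  # hypothetical stand-in for an LLM call
    max_words: int = 2000,
) -> str:
    """Summarize each chunk, then the joined summaries, until the text fits one call."""
    while len(text.split()) > max_words:
        partials = [summarize(chunk) for chunk in chunk_words(text, max_words)]
        shorter = "\n".join(partials)
        if len(shorter.split()) >= len(text.split()):
            raise ValueError("summaries are not shrinking the text")
        text = shorter
    return summarize(text)
```

Each extra round compresses summaries of summaries, which is exactly where detail from the original document can be lost.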

Difficulty in System Integration

From a technical standpoint, integrating LLMs into existing systems can be cumbersome. At present, the cost of running our own LLMs is prohibitive for an organization the size of Arq Works, and the models available to run locally are not yet as powerful as those provided by the current market leaders (OpenAI and Google). So, for now, we all rely on a couple of API providers for these services.

Understandably, while those providers scale up to cater for demand, their services are occasionally slow or unresponsive, so performing actions at scale can take longer than you would expect. OpenAI also limits the number of requests each account can make per minute, and accounts start with a very low spending limit that has to be increased, on request, in several steps. This makes sense: they are trying to scale the service up and create a market. It would be interesting to know whether they are making any profit on these requests; if not, that is a potential risk for the future should the services prove unsustainable.
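
When requests are rate-limited (OpenAI signals this with HTTP 429) or time out, the usual remedy is to retry with exponential backoff and some random jitter. A minimal sketch: `TransientAPIError` is a hypothetical stand-in for whatever exception your provider's client raises, and the sleep function is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientAPIError(Exception):
    """Hypothetical stand-in for a provider's rate-limit (HTTP 429) or timeout error."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on transient errors, doubling the wait (plus random jitter) each time."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))  # jitter avoids synchronized retries
    raise AssertionError("unreachable")
```

Backoff smooths over short outages but does not raise your quota, so large batch jobs still need to be paced to the account's per-minute limits.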

The Positives: Areas Where Language Models and AI Have Quickly Added Value

Even with the difficulties mentioned above, large language models and AI are great tools that are only getting better, and they keep us on a continual learning curve. While it is essential to understand and navigate their limitations, they have opened up whole areas of development that would have been impossible before they existed. Arq Works has successfully integrated LLMs into our content management system, Arq Plus, and has several live workflows and features where they are actively used. A few of these are highlighted below.

Efficient Text Summarization

One of the standout applications of LLMs at Arq Works has been text summarization. We've been able to condense whole books into concise, coherent summaries. We have created functions in Arq Plus, our content management system, that can create summaries of documents of any length. These can be written in several personas, and the length can be customized. Real-world publisher workflows where this is already deployed include chapter summaries, marketing descriptions, and legal summaries of document sections.
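
Persona and length options can be expressed as a prompt template. The sketch below is illustrative only: the persona names and their instruction wording, `PERSONAS` and `build_summary_prompt` are hypothetical and not the prompts Arq Plus actually uses.

```python
from typing import Dict

# Hypothetical personas; the instruction wording is illustrative only.
PERSONAS: Dict[str, str] = {
    "marketing": "Write an engaging marketing description for potential readers.",
    "chapter": "Write a neutral chapter summary for a reader revising the material.",
    "legal": "Write a precise legal summary, keeping defined terms and section numbers.",
}


def build_summary_prompt(text: str, persona: str, max_words: int) -> str:
    """Assemble a summarization prompt for a given persona and target length."""
    if persona not in PERSONAS:
        raise ValueError(f"unknown persona: {persona!r}")
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    return (
        f"{PERSONAS[persona]}\n"
        f"Use no more than {max_words} words and rely only on the text below.\n\n"
        f"Text:\n{text}"
    )
```

Models treat word limits as guidance rather than a hard cap, so it is worth checking the length of what comes back.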

Content Classification

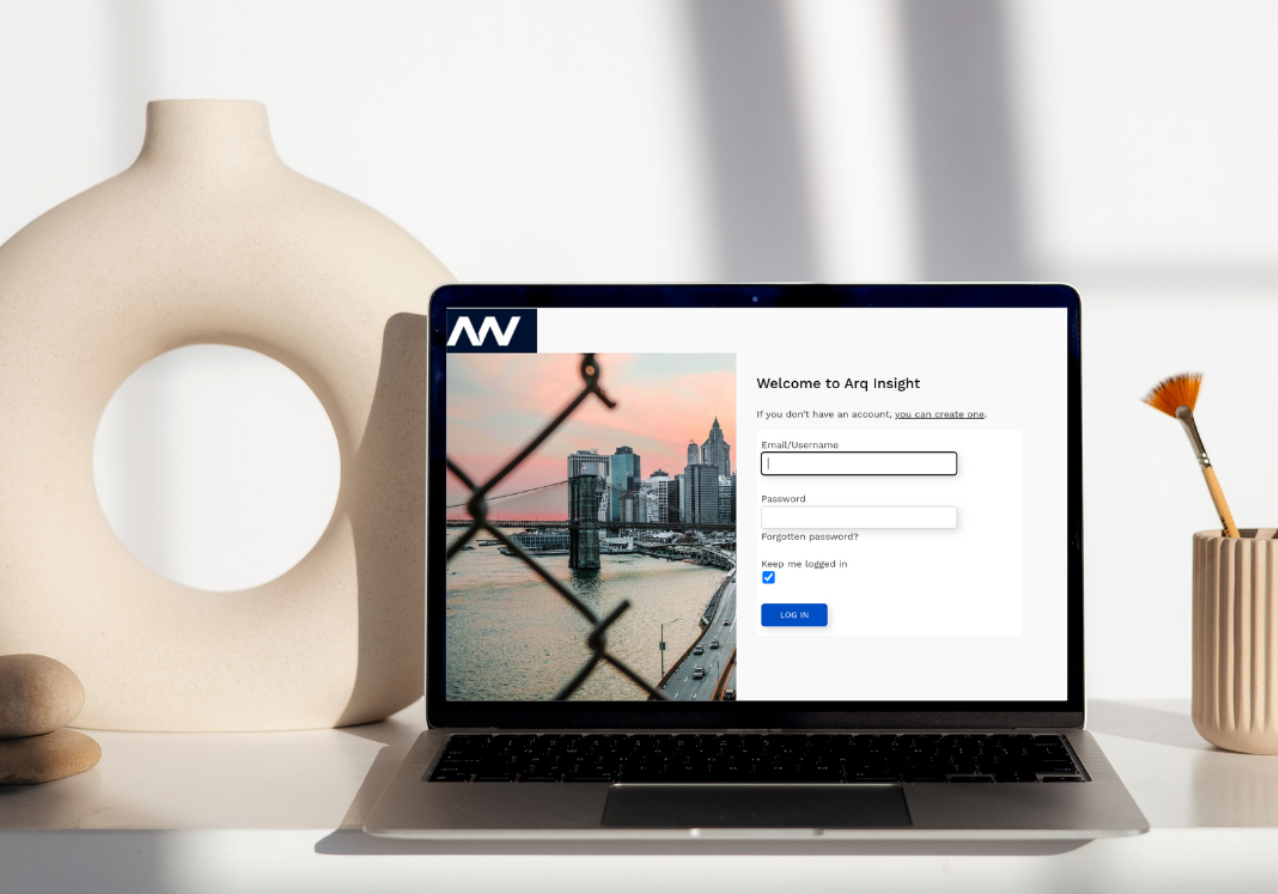

Using LLMs, we can categorize content into industry-standard subject codes such as BISAC and Thema. This process is in the testing phase, and we have a free-to-try service, Arq Insight. If you have an EPUB, PDF or DOC file that you would like summarized and classified using BISAC, give it a try and let us know how it goes.
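
Because a model can return codes that look plausible but do not exist, it is sensible to check its suggestions against the official list. BISAC subject codes are three letters followed by six digits (for example `BUS000000`). In this minimal sketch, the allowed list is assumed to be supplied by the caller (for instance, loaded from the BISG subject headings list), and `valid_bisac_codes` and the default limit of three codes are hypothetical.

```python
import re
from typing import Iterable, List

# BISAC subject codes: three letters followed by six digits, e.g. "BUS000000".
BISAC_CODE = re.compile(r"\b[A-Z]{3}\d{6}\b")


def valid_bisac_codes(model_output: str, allowed: Iterable[str], limit: int = 3) -> List[str]:
    """Keep well-formed codes that appear in the supplied list, in order, without duplicates.

    `allowed` is assumed to come from the official BISAC list; `limit` is an arbitrary cap.
    """
    allowed_set = set(allowed)
    result: List[str] = []
    for code in BISAC_CODE.findall(model_output.upper()):
        if code in allowed_set and code not in result:
            result.append(code)
        if len(result) == limit:
            break
    return result
```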

Automated Question Generation for CPD

Professional development materials often include end-of-chapter questions to gauge understanding and retention. Creating these questions can be meticulous work, but with LLMs we've been able to streamline the process. We have used AI to generate questions for continuing professional development materials. While these questions provide an excellent starting point, a human still steps in to add nuance and check accuracy.
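
To fit the human review step, generated questions can be requested as JSON and parsed into draft records that stay unreviewed until an editor signs them off. The JSON shape (`question`/`answer` objects), `DraftQuestion` and `parse_draft_questions` are hypothetical choices for this sketch.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class DraftQuestion:
    question: str
    answer: str
    reviewed: bool = False  # set to True only after a human has checked it


def parse_draft_questions(model_output: str) -> List[DraftQuestion]:
    """Parse an assumed JSON array of {"question", "answer"} objects, skipping malformed items."""
    try:
        items = json.loads(model_output)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []
    drafts = []
    for item in items:
        if (
            isinstance(item, dict)
            and isinstance(item.get("question"), str)
            and isinstance(item.get("answer"), str)
        ):
            drafts.append(DraftQuestion(item["question"].strip(), item["answer"].strip()))
    return drafts
```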

Conclusions

At Arq Works, we view large language models as valuable collaborators. They are tools that can reduce effort, drive operational efficiency and help create new products and services. Our journey with LLMs underscores a balanced approach: embracing their strengths and acknowledging their limitations. In addition to our existing services, we have several AI-backed features launching in the next few months. If you would like to find out more, drop me a message on LinkedIn or Contact Us.